How Slack Built a Secure AI Solution at Scale

Case Study #001: Amazon SageMaker and Strict Data Stewardship Principles

Slack has significantly improved its users' productivity by integrating AI.

In last year's State of Work report, Slack found that users who have adopted AI are 90% more likely to report higher productivity.

This case study looks into how Slack achieved this by leveraging Amazon SageMaker and adhering to strict data stewardship principles.

🗃️ Data Sovereignty

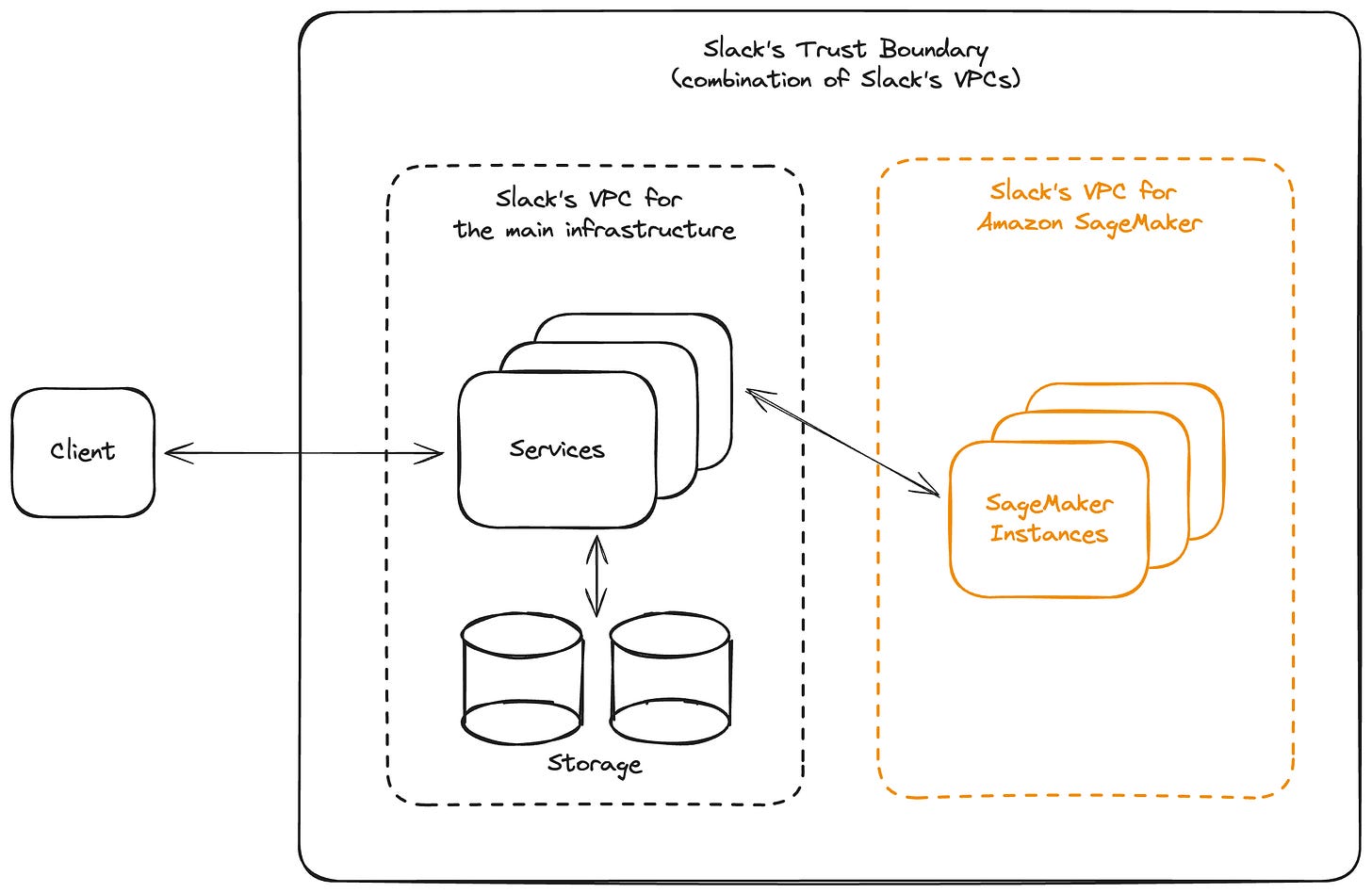

Slack's first and foremost principle is that customer data never leaves its trust boundary, the Virtual Private Cloud (VPC).

Slack prevents third parties from accessing customer data by hosting large language models (LLMs) within its own AWS infrastructure.

🌐 VPC Hosting with Amazon SageMaker

Amazon SageMaker is a fully managed service for building, training, and deploying machine learning models. Its main workflow is:

Data Preparation: Every model is trained on data, which first has to be imported, prepared, and preprocessed.

Model Training: LLMs learn from data, typically through self-supervised pretraining followed by supervised fine-tuning, so they need to be trained. To reach the scale and latency Slack requires, models have to run on managed infrastructure that scales automatically.

Deployment: The models need to be deployed to production with high confidence. They should be decoupled from other parts of the application to reduce the risk of a single failure taking down the whole system.

The main benefit Slack leveraged, though, is the ability to deploy SageMaker instances into a custom VPC that can later be connected to the existing Slack VPC. This architecture ensures that no one other than Slack has access to the data.
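
As a rough illustration of VPC hosting, the sketch below builds a request for SageMaker's `CreateModel` API with a `VpcConfig` and network isolation enabled. The parameter names follow boto3's `create_model` call. The function name and every value (model name, image URI, S3 path, role ARN, subnet and security group IDs) are hypothetical placeholders, not Slack's actual configuration.

```python
from typing import Any


def build_vpc_model_request(
    model_name: str,
    image_uri: str,
    model_data_url: str,
    role_arn: str,
    subnet_ids: list[str],
    security_group_ids: list[str],
) -> dict[str, Any]:
    """Build keyword arguments for SageMaker's CreateModel API (hypothetical helper)."""
    if not subnet_ids or not security_group_ids:
        raise ValueError("A VPC deployment needs at least one subnet and one security group")
    return {
        "ModelName": model_name,
        "PrimaryContainer": {"Image": image_uri, "ModelDataUrl": model_data_url},
        "ExecutionRoleArn": role_arn,
        # Attach the model container to subnets inside the chosen VPC.
        "VpcConfig": {
            "Subnets": list(subnet_ids),
            "SecurityGroupIds": list(security_group_ids),
        },
        # Block the container from making outbound network calls.
        "EnableNetworkIsolation": True,
    }


# All values below are made-up placeholders for illustration only.
request = build_vpc_model_request(
    model_name="example-llm",
    image_uri="123456789012.dkr.ecr.us-east-1.amazonaws.com/example-llm:latest",
    model_data_url="s3://example-bucket/model.tar.gz",
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
    subnet_ids=["subnet-0example1", "subnet-0example2"],
    security_group_ids=["sg-0example"],
)

# With AWS credentials configured, the request could be sent with:
#   import boto3
#   boto3.client("sagemaker").create_model(**request)
```

Keeping the request builder separate from the API call makes the network settings easy to review and test without touching a real AWS account.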

🔒 No Training on Customer Data

The second goal is to ensure that LLMs are not trained on a company's complete knowledge base, because a model trained this way could leak data from workspaces that the current user cannot access.

Slack decided to use off-the-shelf, generally trained models instead of training custom models on customer data. To give the models enough context, Slack uses a technique known as Retrieval Augmented Generation (RAG).

🤖 Retrieval Augmented Generation (RAG)

RAG ensures that the data used to generate a result is ephemeral and task-specific, preventing long-term retention.

It is an advanced technique in natural language processing that produces more accurate and contextually relevant outputs by combining:

Retrieval Component: This component searches the knowledge documents for information relevant to the user’s query. The goal is to find contextually relevant data for the generation process.

Generation Component: Using the retrieved documents as context, an LLM produces a contextually relevant response. This ensures the output is grounded in real data rather than being purely speculative.

The combination of retrieval and generation reduces the likelihood of errors and makes AI more reliable for business use. At the same time, RAG allows Slack to use data only for the duration of the task without storing it long-term, thus enhancing privacy.
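
The two components can be sketched in a few lines of Python. This is a minimal toy, not Slack's pipeline: retrieval here is plain word overlap (production systems typically use search indexes or embeddings), and `generate` is a hypothetical stand-in for a call to a hosted LLM. The context is built per request and never stored, which mirrors the ephemeral, task-specific use of data described above.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents sharing the most words with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no overlap, then rank the rest best first.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [doc for _, doc in ranked[:top_k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine the retrieved documents and the question into one prompt."""
    context_block = "\n".join(f"- {doc}" for doc in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}"
    )


def answer(query: str, documents: list[str], generate: Callable[[str], str]) -> str:
    """Retrieve context for this request only, then hand the prompt to the model."""
    context = retrieve(query, documents)
    return generate(build_prompt(query, context))


# Made-up documents for illustration.
docs = [
    "The launch date for Project Apollo is March 3.",
    "Lunch menu: pasta on Friday.",
    "Apollo launch checklist is owned by the platform team.",
]
query = "When is the Apollo launch date?"
prompt = build_prompt(query, retrieve(query, docs))
```

Here `retrieve` selects the two Apollo documents and leaves the lunch menu out of the prompt, so the model only ever sees data relevant to the task at hand.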

🔑 User-Specific Data Access

Slack AI only accesses data that the user is already authorized to see. Data privacy is enforced through Access Control Lists (ACLs).

ACLs contain rules that define which resources a user can access and which operations the user can perform on those resources. For example, suppose Alice's ACL grants access to Workspace A only, while Bob's ACL grants access to both Workspace A and Workspace B.

In this case, if Alice wants to use Slack’s LLM to generate a response, she can use only Workspace A as the RAG context. On the other hand, Bob can use both Workspace A and Workspace B, as given by their ACLs.
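
The Alice and Bob example can be expressed as a small filter that runs before any data reaches the LLM. The sketch below is illustrative only: the `ACL` table, the `Message` type, and `readable_messages` are hypothetical names, and only read access is modeled, not the per-operation rules an ACL can also carry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    workspace: str
    text: str


# Hypothetical ACL: user -> workspaces the user is allowed to read.
ACL: dict[str, frozenset[str]] = {
    "alice": frozenset({"Workspace A"}),
    "bob": frozenset({"Workspace A", "Workspace B"}),
}

# Made-up messages for illustration.
MESSAGES = [
    Message("Workspace A", "Q3 roadmap draft"),
    Message("Workspace B", "Acquisition notes"),
]


def readable_messages(user: str, messages: list[Message]) -> list[Message]:
    """Keep only messages from workspaces the user is authorized to read."""
    allowed = ACL.get(user, frozenset())  # unknown users get no access
    return [m for m in messages if m.workspace in allowed]


alice_context = readable_messages("alice", MESSAGES)
bob_context = readable_messages("bob", MESSAGES)
```

Only the filtered list would be passed on as RAG context, so a response generated for Alice can never draw on Workspace B.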

Summary

By focusing on the principles of:

Data Sovereignty enforced by strict control of data flow via VPCs

Stateless LLMs enhanced with Retrieval Augmented Generation

Privacy enforced by Access Control Lists

Slack successfully integrates AI while ensuring the highest levels of security and privacy in enterprise environments.

Disclaimer: This case study showcases industry best practices based on publicly available information. The specific underlying structure of Slack might be different.

📖 More from Enginuity

In the last issue, we looked into how being a Problem Solver can boost your professional and personal growth, and I shared some tips on how to build this mindset:

The Power of Problem Solving: How To Grow From Senior to Staff+

How you approach problems can significantly impact your career trajectory and personal growth. Two prevalent mindsets stand out: the Problem Bringer and the Problem Solver. Understanding the distinction between these mindsets and transitioning from bringing problems to the table to actively solving them is crucial to making a meaningful impact in your c…

📣 Top picks

7 Tips to Crush Your Onboarding from An Apple Staff Engineer by Jordan Cutler and Akash Mukherjee in High Growth Engineer

How to grow from mid-level to senior Software Engineer by Gregor Ojstersek in Engineering Leadership

How to Manage Gen-Z by Mirek Stanek in Practical Engineering Management
