How DataDog Processes Hundreds of Terabytes of Telemetry Every Day

Case Study #003: Data Engineering at Scale With Apache Spark and Kubernetes

With over 22,000 customers, DataDog’s observability and security platform stands out as an industry leader. It seamlessly processes vast amounts of data to deliver actionable insights.

But how has DataDog scaled its data engineering platform to handle trillions of data points per day without compromising speed or reliability?

In this article, we examine the technical details that allow DataDog to operate at this scale, covering:

What Apache Spark is and how it works

The powerful combination of Apache Spark and Kubernetes

Optimizations for processing hundreds of terabytes of data daily

⚡ The Power of Apache Spark in DataDog’s Infrastructure

Apache Spark is a powerful, open-source distributed data processing engine known for its speed and ease of use.

It supports various workloads, including batch processing, streaming, machine learning, and interactive queries, making it a perfect fit for DataDog’s large-scale data processing needs.

Key Features of Apache Spark:

In-Memory Processing: Spark's ability to process data in memory significantly reduces the time required for complex data operations. It retains intermediate results in memory, which is much faster than the disk-based intermediate storage of Hadoop MapReduce.

Rich API: Spark provides high-level APIs in Java, Scala, Python, and R, making it accessible for developers with different expertise.

Fault Tolerance: Spark records the lineage of each dataset, that is, the chain of transformations used to derive it from its source. When a partition is lost, Spark can recompute it from that lineage, which preserves data integrity and reliability.

Scalability: Spark’s architecture can efficiently handle growing volumes of data, which is crucial for DataDog’s scale.
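To make the lineage idea more concrete, here is a minimal sketch in plain Python. It is not Spark code: `ToyDataset`, `source`, and the sample numbers are hypothetical names invented for illustration. The point it tries to show is that a dataset which remembers how it was derived can rebuild a lost partition without any replica.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ToyDataset:
    """Hypothetical stand-in for an RDD: it stores a recipe (lineage), not data."""
    num_partitions: int
    # Recomputes one partition from scratch, given the partition index.
    compute: Callable[[int], List[int]]

    def map(self, fn: Callable[[int], int]) -> "ToyDataset":
        parent = self
        # The child only remembers "apply fn to whatever the parent produces".
        return ToyDataset(self.num_partitions,
                          lambda i: [fn(x) for x in parent.compute(i)])

    def filter(self, pred: Callable[[int], bool]) -> "ToyDataset":
        parent = self
        return ToyDataset(self.num_partitions,
                          lambda i: [x for x in parent.compute(i) if pred(x)])


def source(partition_index: int) -> List[int]:
    # Assumed source: partition i holds three consecutive integers.
    start = partition_index * 3
    return list(range(start, start + 3))


base = ToyDataset(num_partitions=2, compute=source)
derived = base.map(lambda x: x * 10).filter(lambda x: x % 20 == 0)

# Materialize every partition, as an executor cache might.
cache = {i: derived.compute(i) for i in range(derived.num_partitions)}

# Simulate losing the executor that held partition 1.
lost = cache.pop(1)

# Recovery: replay the lineage for the missing partition only.
recovered = derived.compute(1)
cache[1] = recovered
```

Only the lost partition is recomputed here; partition 0 stays untouched in the cache, which is the property that makes lineage-based recovery cheap compared with replicating every intermediate result.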

DataDog relies heavily on Apache Spark for its batch processing capabilities, and using Spark, it processes hundreds of terabytes of data daily.

🏗️ Spark’s Architecture

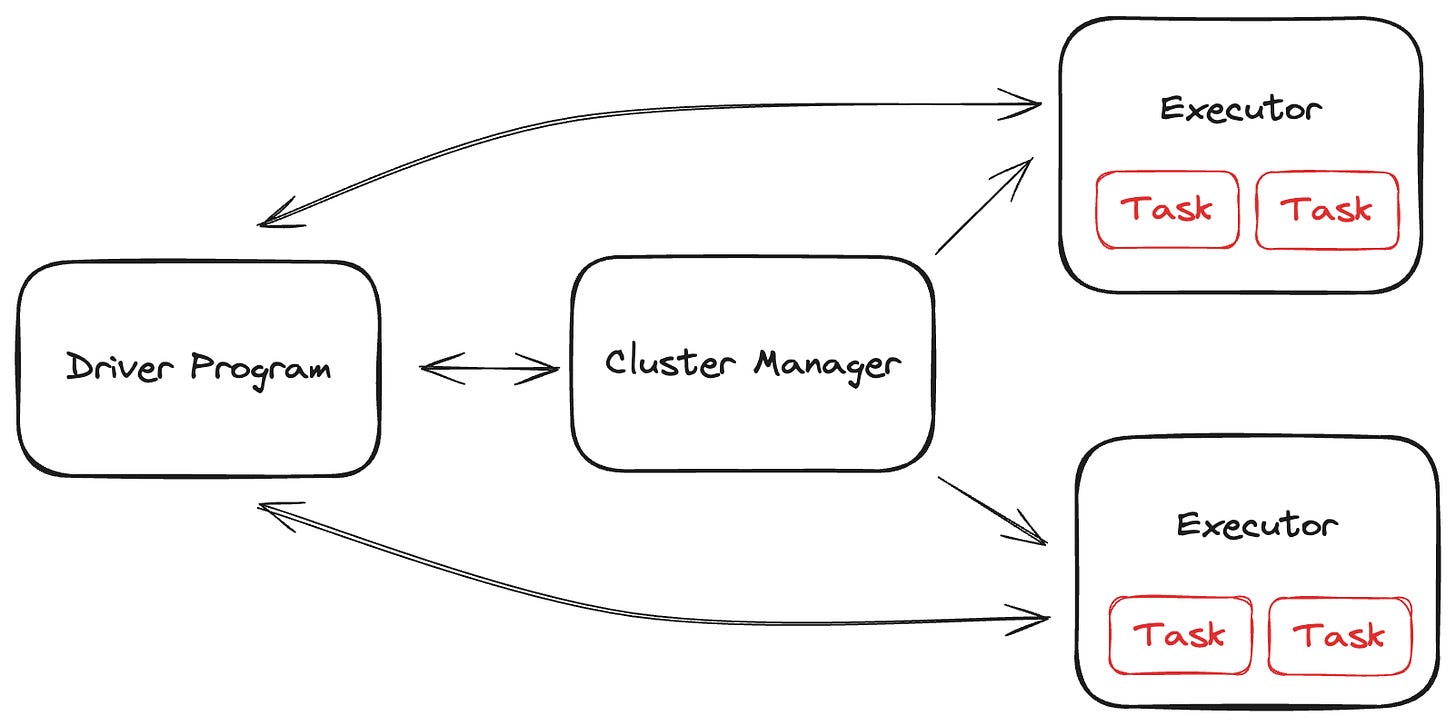

Spark’s distributed architecture consists of:

Driver Program coordinating tasks

Cluster Manager allocating resources

Multiple Executors running the tasks

Data in Spark is represented via a Resilient Distributed Dataset (RDD), a fundamental data structure that models a distributed collection of objects. RDDs are partitioned and distributed across the cluster with three main goals:

Optimizing parallelism

Providing fault tolerance

Minimizing network bandwidth usage
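As a rough illustration of why partitioning helps parallelism, the sketch below splits a collection into partitions, processes each one independently, and combines the partial results. It uses a local thread pool in plain Python rather than Spark executors, and the helper names (`split_into_partitions`, `partition_sum`) are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_into_partitions(items: List[int], num_partitions: int) -> List[List[int]]:
    # Round-robin split: element k goes to partition k % num_partitions.
    return [items[i::num_partitions] for i in range(num_partitions)]


def partition_sum(partition: List[int]) -> int:
    # Work done independently on one partition, with no data from the others.
    return sum(partition)


data = list(range(1, 101))
partitions = split_into_partitions(data, num_partitions=4)

# Each worker handles one partition; only the small partial results are combined.
with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(partition_sum, partitions))

total = sum(partial_sums)
```

Because each partition is processed without looking at the others, only the four partial sums need to be moved to a single place, which mirrors the goal of keeping network traffic small.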

✨ Running Spark on Kubernetes Clusters

DataDog uses Kubernetes to deploy and manage Apache Spark. Kubernetes is an open-source container orchestration platform that allows DataDog to manage resources efficiently across multiple cloud environments.

Before Kubernetes, DataDog integrated directly with:

Azure HDInsight

GCP Dataproc

AWS EMR

By leveraging Kubernetes, DataDog decreased maintenance costs across different cloud providers and gained finer control over the resources allocated to tasks. Kubernetes now acts as the Cluster Manager in DataDog’s Spark architecture.

To further optimize resource management, DataDog uses Apache YuniKorn, a resource scheduler for Kubernetes.

YuniKorn improves resource utilization by efficiently scheduling Spark jobs, preventing resource deadlocks and starvation, and enabling gang scheduling.

YuniKorn’s gang scheduling ensures that all necessary resources for a job are available before execution, minimizing idle time and resource wastage.
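The all-or-nothing idea behind gang scheduling can be sketched in a few lines of plain Python. This is a toy model, not YuniKorn's algorithm or API: the `Job` class, the `schedule_gang` function, and the CPU numbers are all assumptions made for illustration, and the real scheduler also deals with queues, priorities, and placeholder pods.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Job:
    name: str
    pods: int         # driver plus executors the job needs to make progress
    cpu_per_pod: int  # CPU cores requested by each pod


def schedule_gang(jobs: List[Job], free_cpu: int) -> Tuple[List[str], List[str], int]:
    """Admit a job only if ALL of its pods fit at once; otherwise keep it queued."""
    admitted: List[str] = []
    queued: List[str] = []
    for job in jobs:
        needed = job.pods * job.cpu_per_pod
        if needed <= free_cpu:
            free_cpu -= needed          # reserve the whole gang in one step
            admitted.append(job.name)
        else:
            queued.append(job.name)     # no partial start, so nothing sits idle
    return admitted, queued, free_cpu


jobs = [
    Job("etl-a", pods=5, cpu_per_pod=2),  # needs 10 cores
    Job("etl-b", pods=4, cpu_per_pod=2),  # needs 8 cores
    Job("etl-c", pods=2, cpu_per_pod=2),  # needs 4 cores
]
admitted, queued, remaining = schedule_gang(jobs, free_cpu=16)
```

In this run `etl-b` stays queued rather than starting with three of its four pods. Without the all-or-nothing rule, those three pods would hold six cores while waiting for a fourth that might never arrive, which is the kind of deadlock and waste gang scheduling is meant to prevent.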

⏱️ Optimization Techniques for High-Volume Data Processing

Scaling to hundreds of terabytes per day needs more than powerful tools. It requires strategic optimization to ensure both performance and cost efficiency.

We discussed one such optimization in the previous section: strategic resource allocation in Kubernetes using Apache YuniKorn. Now, let’s look at how DataDog optimizes data transformations in Spark.

Spark Shuffle Operations

A Spark shuffle operation is a mechanism that redistributes data across the cluster. During a shuffle, Spark transfers data from multiple partitions to reorganize and group records based on keys.
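The following plain-Python sketch imitates what a shuffle does conceptually: records sitting in several input partitions are routed by key hash so that all values for a key end up in the same output partition. It is a simplified model with hypothetical names (`partition_for`, `shuffle`) and sample host keys; Spark's real shuffle also involves serialization, disk files, and network fetches.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, int]


def partition_for(key: str, num_partitions: int) -> int:
    # A stable hash decides the target partition, so equal keys always meet.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def shuffle(map_partitions: List[List[Record]],
            num_reduce_partitions: int) -> List[Dict[str, List[int]]]:
    reduce_partitions = [defaultdict(list) for _ in range(num_reduce_partitions)]
    for partition in map_partitions:
        for key, value in partition:
            target = partition_for(key, num_reduce_partitions)
            # In a real cluster this append is a transfer across the network.
            reduce_partitions[target][key].append(value)
    return [dict(p) for p in reduce_partitions]


# Two input partitions; "host-a" appears in both and must be brought together.
map_partitions = [
    [("host-a", 1), ("host-b", 2)],
    [("host-a", 3), ("host-c", 4)],
]
reduced = shuffle(map_partitions, num_reduce_partitions=2)
```

Every record may have to move to a different machine, which is why the amount of shuffled data matters so much for performance.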

While powerful, shuffle operations are resource-intensive and can become a performance bottleneck, because the cost of a shuffle rises sharply as the volume of shuffled data grows. Large datasets also bring the following challenges:

Out of Memory (OOM) Errors: Large shuffle operations can exhaust the memory available to executors, leading to OOM errors.

Disk Spilling: When the data size exceeds the available memory, Spark spills data to disk, which significantly slows down the process due to increased disk I/O.

Network Overhead: Transferring large amounts of data across the network can introduce significant latency and congestion, further amplifying performance issues.

Choosing Optimal Shuffle Partition Size

To mitigate the challenges of large shuffle operations, DataDog focuses on optimizing the size of shuffle partitions. They found that keeping shuffle partitions in the range of hundreds of MiB works best for them. This size is a sweet spot that balances memory usage and performance:

💾 Memory Fit: Partitions of this size can typically fit into memory, reducing the need for disk swapping and avoiding OOM errors.

🗃️ Improved Compression: Well-sized partitions can be compressed more efficiently, reducing storage and transfer costs. Partitions that are too small do not compress as efficiently.

🛜 Reduced Network Requests: By not having unnecessarily small partitions, DataDog minimizes the number of network requests required during shuffle operations, reducing overall latency.
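One simple way to act on a target partition size is to derive the number of shuffle partitions from the expected shuffle volume. The sketch below does that arithmetic in plain Python. The 200 MiB target and the 1 TiB input are assumed example values, not figures published by DataDog, and `shuffle_partition_count` is a hypothetical helper. The result could then be supplied to Spark through its `spark.sql.shuffle.partitions` setting.

```python
MIB = 1024 * 1024
TIB = 1024 * 1024 * MIB


def shuffle_partition_count(shuffle_bytes: int,
                            target_partition_bytes: int = 200 * MIB) -> int:
    """Number of partitions needed so each holds at most the target size."""
    if shuffle_bytes <= 0:
        return 1
    # Integer ceiling division avoids float rounding on very large byte counts.
    return -(-shuffle_bytes // target_partition_bytes)


# Assumed example: a stage that shuffles 1 TiB with a 200 MiB target.
partitions_for_1_tib = shuffle_partition_count(1 * TIB)

# A small shuffle still gets at least one partition.
partitions_for_50_mib = shuffle_partition_count(50 * MIB)
```

With these assumed numbers, a 1 TiB shuffle works out to 5,243 partitions. Leaving the partition count at a small fixed value for a shuffle of that size would produce partitions of several GiB each, which is where the memory and spilling problems described above tend to appear.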

Tungsten Format and DSL

Tungsten is an internal row-based format in Spark that minimizes memory overhead by storing data in a compact binary layout, which avoids creating bulky Java objects.

Tungsten supports off-heap memory storage, further enhancing performance by reducing garbage collection overhead.

There are two main ways to perform operations on data in the Tungsten format:

DataFrame DSL (Domain Specific Language) transformations

Lambda transformations

DataDog prioritizes DSL transformations, which operate directly on Tungsten-encoded data and are more efficient than Lambda transformations that require converting data into Java objects.
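The difference can be illustrated with an analogy in plain Python. This is not Spark or Tungsten code: the row layout, the `Metric` class, and both functions are hypothetical. The first function reads a single field directly out of a packed binary buffer, loosely like a DSL expression the engine understands. The second builds a full object per row before calling opaque user code, loosely like a lambda transformation.

```python
import struct
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple

# Assumed fixed-width row layout: host_id (int32) followed by latency_ms (float64).
ROW = struct.Struct("<id")
LATENCY_FIELD = struct.Struct("<d")
LATENCY_OFFSET = 4  # latency_ms starts right after the 4-byte host_id


@dataclass
class Metric:
    host_id: int
    latency_ms: float


def encode_rows(rows: Iterable[Tuple[int, float]]) -> bytes:
    # Pack all rows back to back into one compact buffer.
    return b"".join(ROW.pack(host_id, latency) for host_id, latency in rows)


def sum_latency_binary(buf: bytes) -> float:
    """DSL-like path: read only the needed field, no per-row object."""
    total = 0.0
    for offset in range(0, len(buf), ROW.size):
        (latency,) = LATENCY_FIELD.unpack_from(buf, offset + LATENCY_OFFSET)
        total += latency
    return total


def sum_latency_objects(buf: bytes, fn: Callable[[Metric], float]) -> float:
    """Lambda-like path: decode every field into an object, then run user code."""
    total = 0.0
    for host_id, latency in ROW.iter_unpack(buf):
        total += fn(Metric(host_id, latency))
    return total


buf = encode_rows([(1, 12.5), (2, 7.5), (1, 30.0)])
binary_total = sum_latency_binary(buf)
object_total = sum_latency_objects(buf, lambda m: m.latency_ms)
```

Both paths return the same total, but the second one allocates an object for every row and decodes fields it never uses. At hundreds of terabytes per day, that extra conversion and the garbage it creates add up.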

✏️ Summary

DataDog ingests and processes hundreds of terabytes of data daily by leveraging advanced data processing techniques and maintaining a robust data engineering platform. The core architecture pieces that allow for this scale are:

Apache Spark

Kubernetes cluster management

Apache YuniKorn resource scheduler

Optimizing data partition size and shuffle operations

Off-heap data in Tungsten format and transformations via DataFrame DSL

Disclaimer: This case study showcases industry best practices based on publicly available information. The specific underlying structure of DataDog might be different.

